Visual Tool Helps Users Moderate Their Language When Platforms Flag Their Comments as Toxic

Healthy online communities might at some point have an element of toxic behavior from a few bad actors. Then there are those web forums that breed it.

With the widespread use of toxic language online, platforms are increasingly using automated systems that leverage advances in natural language processing – where computers analyze large amounts of human text data – to automatically flag and remove toxic comments. However, most automated systems, when detecting and moderating toxic language, do not give users feedback or a way to make actionable changes to their comments.

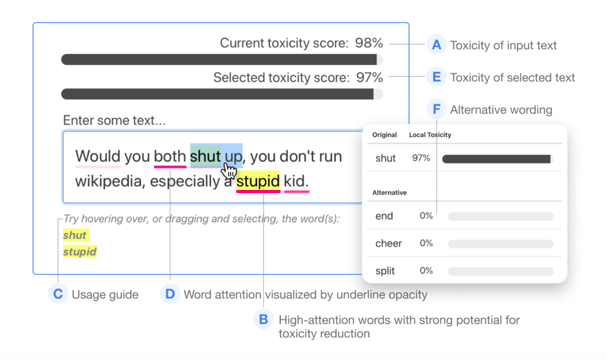

A research team from Georgia Tech and Mailchimp has developed an interactive, open-source web tool, dubbed RECAST, for visualizing these automated systems’ toxicity predictions while providing alternative suggestions for flagged toxic language.

“Our tool provides users with a new path of recourse when using these automated moderation tools,” wrote Austin Wright, a Ph.D. student in computer science and lead researcher.

“RECAST highlights text responsible for classifying toxicity, and allows users to interactively substitute potentially toxic phrases with neutral alternatives,” Wright wrote as part of the findings.
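To make the idea of highlighting and substituting concrete, here is a minimal sketch in Python. It is not RECAST's implementation, which the article does not describe. It assumes a toy word-weight table in place of a real toxicity model, and it uses a simple leave-one-out comparison to estimate which words drive the score. Every name and number in it (`TOY_WEIGHTS`, `TOY_ALTERNATIVES`, `toy_score`, `attributions`, `suggest`) is hypothetical and chosen only for illustration.

```python
# Toy illustration only. The weights and alternatives below are made up
# and stand in for a real toxicity model and a real suggestion engine.
TOY_WEIGHTS = {"stupid": 0.6, "idiot": 0.8, "hate": 0.5}
TOY_ALTERNATIVES = {"stupid": "mistaken", "idiot": "person", "hate": "dislike"}


def toy_score(words):
    """Return a toxicity score in [0, 1]: the sum of toy weights, capped at 1."""
    return min(1.0, sum(TOY_WEIGHTS.get(w.lower(), 0.0) for w in words))


def attributions(words):
    """Leave-one-out: how much the score drops when each word is removed."""
    base = toy_score(words)
    return [base - toy_score(words[:i] + words[i + 1:]) for i in range(len(words))]


def suggest(words, threshold=0.1):
    """Swap words whose attribution exceeds the threshold for a neutral alternative."""
    return [
        TOY_ALTERNATIVES.get(w.lower(), w) if a > threshold else w
        for w, a in zip(words, attributions(words))
    ]


comment = "that idea is stupid".split()
print(toy_score(comment))              # score of the original comment
print(attributions(comment))           # per-word contribution to the score
print(" ".join(suggest(comment)))      # comment with flagged words replaced
```

A real tool would replace `toy_score` with calls to the platform's classifier and would let the user choose among several alternatives rather than applying one automatically.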

The team examined the effect of RECAST via two large-scale user evaluations, and found that it was highly effective at helping users reduce toxicity as detected through the model. Users also gained a stronger understanding of the underlying toxicity criterion used by black-box models, enabling transparency and recourse for users.

In addition, they found that when users focus on optimizing language for these automated models instead of relying on their own judgement, the models cease to be effective classifiers of toxicity compared to human annotations. This opens a discussion about how toxicity detection models work and should work, and about their effect on the future of online discourse, according to results from the study.

Evaluating the Effectiveness of Deplatforming as a Moderation Strategy on Twitter

Authors: Shagun Jhaver (Rutgers University), Christian Boylston (Georgia Tech), Diyi Yang (Georgia Tech), Amy Bruckman (Georgia Tech)

When in Doubt, Kick Them Out?

What happens when a prominent social influencer’s speech becomes too offensive to be ignored? When the words they say or the content they share raises toxicity to unhealthy and even dangerous levels?

Is the best course of action simply to remove them from the platform entirely?

A team of Georgia Tech researchers evaluated the effects of such a ban — called “deplatforming” — examining the cases of three prominent individuals who had been removed from Twitter for their speech: Alex Jones, Milo Yiannopoulos, and Owen Benjamin.

Rather than examine when such an approach might be advisable or how it might be perceived as an affront to one’s protected speech, the team simply examined the key question: What happens when someone is deplatformed?

Working with over 49 million tweets, they found that permanent bans significantly reduced the number of conversations on Twitter about the individuals. Additionally, the overall activity and toxicity levels of their supporters subsequently declined as well.

The paper offers broader examinations of the practice as well as its implications.

Remote Education in a Post-Pandemic World

Educators once reluctant to bring computing technologies into everyday classroom settings have found themselves with little choice but to embrace them. The ongoing COVID-19 pandemic has forced a global shift from in-person learning to increasingly remote digital environments.

It’s one thing to make such a seismic shift in areas with fewer constraints, but what about underserved populations? How have they made the transition despite a relative lack of digital resources?

A team of researchers conducted interviews with students, teachers, and administrators from underserved contexts in India and found that online learning in these settings relied on resilient human infrastructures to help overcome technological limitations.

The goal of the research is to inform future educational technology design post-pandemic, outlining areas for improvement in design of online platforms in resource-constrained settings.

The Pandemic Shift to Remote Learning Under Resource Constraints

Authors: Prerna Ravi (Georgia Tech), Azra Ismail (Georgia Tech), Neha Kumar (Georgia Tech)